Featured work using Three.js

WebGL is transforming how we experience visual content online. Storytellers in the music video, commercial, and film worlds are all jumping on board. But what's possible, and where are things headed? I asked San Francisco-based creative coder/technologist Michael Chang, aka Flux, with whom I graduated from UCLA Design | Media Arts, to shine a light on his work and the challenges of the medium.

How would you describe WebGL and its connection to OpenGL, and how can creatives use and engage with it?

Michael Chang I think there are some exciting times ahead of us with WebGL. So many computers out there are now capable of displaying high-performance graphics, but until now that capability has been locked away behind the curtain in the realm of desktop applications.

Obviously not everything needs to be in 3D, or even graphics-accelerated, but there are many situations where this would benefit content on the web: for example, displaying weather and storm systems, mapping geographic data, or any case where it makes sense to show several million data points at once. We're only seeing the tip of the iceberg right now. Things will really take off when libraries like THREE.js and Processing.js mature and start being used to put a lot more creative coding work directly on the web.

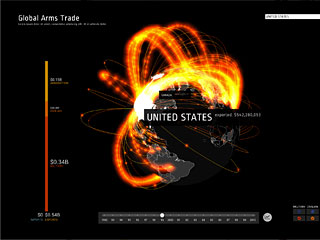

Arms Trade Visualization

What is your favorite part of being a Creative Technologist on the Data Arts Team at Google? How does that job relate to your recent projects such as “Generative Machines” and “Small Arms Imports / Exports”?

MC Probably the team itself. Aaron Koblin is wonderful at making the right connections and providing direction, and the people I have the privilege to sit alongside and work with (Jono Brandel, Doug Fritz, Ray McClure, and George Brower) are all really talented, have great ideas, and give me a lot of inspiration and feedback.

The Data Arts Team is pretty unique, what with our responsibility of "making epic shit." One of the outlets for our work is Chrome Experiments, which gives me some breathing room to explore ideas. For example, Generative Machines was originally going to be a piece for Google I/O 2012, but it still serves as a demonstration of how far WebGL has come and what modern web browsers are capable of.

Generative Machines

Only Google Chrome and Mozilla Firefox enable WebGL by default, while browsers like Microsoft Internet Explorer can't display the content without a workaround plug-in. How does that limitation affect WebGL's path to becoming a standard for 3D? How are you working around it?

MC I can't speak for the Data Arts Team, but in general we try to push the envelope as far as we can with what we have to work with. Unfortunately, this means leaving IE behind in most cases.

ROME “3 Dreams of Black”

In 2011, projects like ROME "3 Dreams of Black," which features Jack White, Norah Jones, Daniele Luppi, and Danger Mouse, were among the first music video projects to demo WebGL. Over a year later, how have things changed in terms of what's possible and what's still not possible?

MC One new, up-and-coming technology that will be exciting to explore is WebRTC and its ability to link people together on a peer-to-peer basis. Traditionally, content has always flowed viewer<--->server, but it would be interesting to start exploring the space of viewer<--->viewer.

The Wilderness Downtown

Google is clearly investing in creative experiments rather than purely technical demonstrations. Director Chris Milk is no stranger to web-based music videos like "The Wilderness Downtown," which was "made with friends" at Google. OK Go also worked with Google on "All Is Not Lost." Since WebGL is an open standard, how accessible is it to creatives not working directly with Google? What are some of the hurdles to overcome, whether in the learning curve or in the constraints on what's doable?

MC Paul Irish has been working his butt off to make a lot of this more accessible to other developers with HTML5Rocks.com, getting developers to post how-tos and spreading good general information. It's definitely important not only to make demos but also to show the process, so that other creatives can push the envelope further.

HTML5's WebRTC makes it easy to access the webcam. How could this be used for more creative work, as opposed to basic demos showing that your webcam works?

MC What excites me about WebRTC camera tracking is how it turns the camera into another input device that, hopefully, doesn't just become another pointer or mouse. There's definitely more data there for a developer to work with: for example, estimating distance from the size of objects, as well as orientation and color. This begins to fall into the think-space of platforms like Oblong's g-speak, which do a lot of that kind of work, and it would be great to see that start happening on the web as well.

That being said, I haven't yet seen a WebRTC demo that has excited me; it can be pretty challenging not to look like every other augmented reality demo!

Chrysaora

WebGL seems to thrive most with interactivity, but projects like "Chrysaora" use it as a visual effect. Where is the tipping point between using WebGL with interactivity and without it? And what are some of the pros and cons from a developer's perspective, in terms of complexity, resources, and so on?

MC Chrysaora's author, Aleksandar Rodic, has worked with us before :) I really admire his work.

Adding interactivity to any project definitely adds a huge layer of complexity, and how much depends on the context of the project and what it involves. At some point with a non-interactive piece, you begin asking yourself why you wouldn't just make it a video in After Effects or something.

There's also the whole shader/GL demoscene, whose work is all about visually delicious, totally non-interactive experiences.

The work I've been doing recently has focused on visually demonstrating something that's hard to see, for example the arms trade around the world, and with subjects like that I tend to favor minimal interactivity, constraining and limiting the user's input as much as I can without hampering the display of data. You have to imagine the context in which someone is viewing work like this: a co-worker probably sent them a link, they click on it, wait for it to load, and have maybe 20 to 30 seconds to decide whether they want to keep looking. Basically, all the context needs to be established right away; if it is, they'll probably stay a few more minutes to experience what you have to offer. It's definitely *not* like a game, where the experience is designed for you to stay and keep seeing new things, so the amount and types of interaction are built around this thought process.

Medal of Honor Warfighter

How do you see WebGL becoming more closely tied to cinematic and narrative video, whether in a martial arts picture or in more music videos?

MC Right now we're seeing more ad agencies use WebGL to promote things. Most recently, for example, EA used it on their Medal of Honor: Warfighter stats page, with a whole spinnable, explorable 3D globe. I'm secretly hoping Pixar will do a short directly in WebGL some day; it would be interesting to see the likes of Brad Bird tell interactive short stories on the web.
